Where do your children’s digital footprints go? Ade Adewunmi, Strategy and Advising Manager at Cloudera Fast Forward Labs, talks to Tech For Good about why data rights for children should be at the top of the agenda.

The next generation is growing up in a digital age. What benefits and dangers does this entail?

There are many benefits to growing up in the digital age. To name just two, digital technologies have made it possible to connect with others and form broader friendships, and they have opened up access to an almost limitless amount of information. The digital age is also influencing the way we learn and work, mostly for the better.

Some companies have identified opportunities for capitalising on the vast amounts of personal and behavioural data being generated, by using it to inform their customer segmentation strategies. By extension, this allows them to offer more tailored products and services and, in some cases, attract advertising revenue.

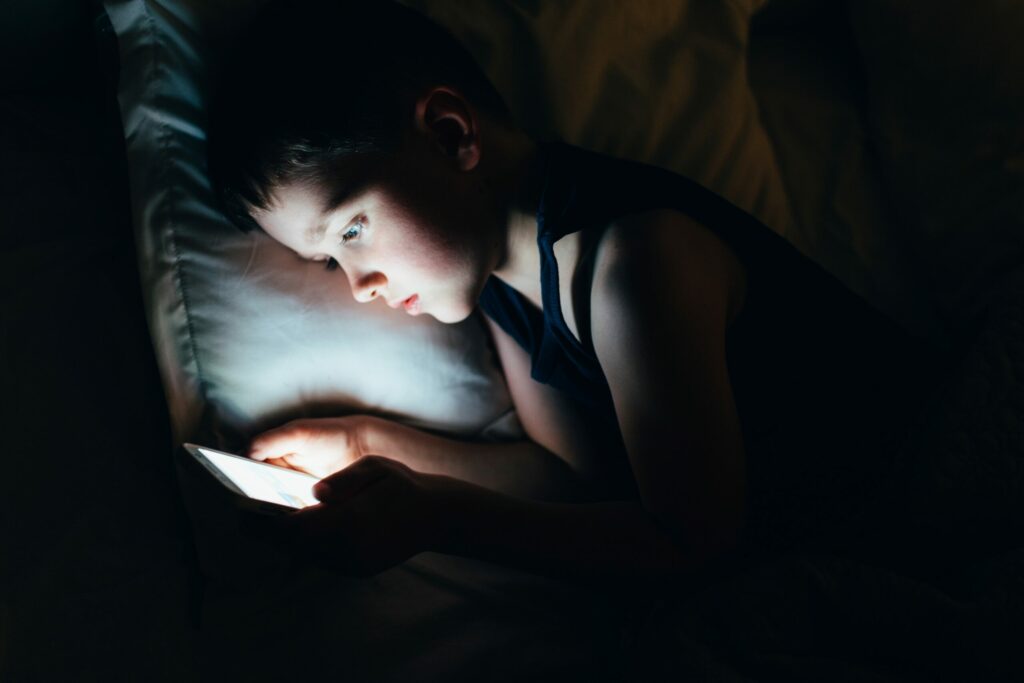

This approach can lead, and has led, to product design decisions that prioritise constant use, both to maximise data collection and to create more opportunities for serving advertising. The challenge is that many younger users of these data-centric digital products are likely to be unaware of these underlying motivations. DefendDigitalMe, in its recent report on the use of metaphors in data policy, notes: “Children are being ‘datafied’ with companies and organisations recording many thousands of data points about them as they grow up.”

Young people could be sharing their data without fully understanding the risks of doing so. Even if they are aware of the risks, they might not be able to protect their data, because avoiding the offending applications or platforms altogether may not be a realistic option. In the same report, DefendDigitalMe highlighted the frustration young people feel about their lack of agency over how data about them is used.

What’s more, parents aren’t always aware of all the ways their children’s data is being collected or of how to protect their children’s data rights. There are undeniable benefits to growing up in the digital age, but there are also risks. This is why it’s so important to regulate the ways that companies and government institutions use children’s data.

How is children’s data being misused online?

I think most people will agree that children and, by extension, their data should be protected. While many legal safeguards exist to protect children, these don’t always extend to their data. A number of well-publicised data breaches have highlighted how vulnerable data is to being stolen or misused, and children’s data is no exception.

In 2019, news broke that gambling companies had been using data from the Learning Records Service (LRS) for age and identity checks. The LRS, which is operated by the Skills Funding Agency, collects data on learners registering for relevant post-14 qualifications such as GCSEs and AS and A2 qualifications. It holds the personal data of tens of millions of people who passed through state schools as children. According to the LRS’s own privacy statement, this data should be used only “by organisations specifically linked to … [record holder’s] education and training”. The Sunday Times article that broke the story stated that as many as 12,000 organisations had access to the LRS database.

The outcry around the data breach resulted in the Information Commissioner’s Office (ICO) conducting a mandatory audit of the Department for Education.

It’s not all doom and gloom, though. As the UK’s data regulator, the ICO has introduced a new code of practice, the Age-Appropriate Design Code. This code directs tech companies that build applications and operate platforms used by children to design their products in ways that protect children’s privacy and reduce their exposure to inappropriate advertising.

This is a step in the right direction but it doesn’t address all the ways in which children’s data is captured. More needs to be done. Ideally, companies and institutions operating in this space will take proactive steps to protect children’s data even before additional regulation is introduced. There is an opportunity here to make this a source of competitive advantage.

What impact has the pandemic had on attitudes towards children’s online safety?

The pandemic-induced lockdowns, and the disruption they have caused to children’s education, have intensified interest in data-centric edtech. They have also meant more time online for children whose usual physical extracurricular activities have been curtailed. Broader use of data-centric digital technologies in places like schools, along with more screen time for children, has drawn more attention to the potential harms these can pose to children. This is a keen area of interest for researchers like Dr Jun Zhao and activist organisations such as the 5Rights Foundation. Dr Zhao is conducting longitudinal research in this area and publishing her findings in academic papers.

However, despite such efforts to educate and inform, we are seeing the pandemic used to justify controversial decisions about the appropriate collection and use of children’s data.

An example of this is the well-publicised case of nine schools in North Ayrshire, Scotland, that sought to incorporate facial recognition (FR) software into their school meal verification processes. The schools claimed this was a necessary step to create a more COVID-secure environment by speeding up queues and reducing the need for physical contact (they had previously used a fingerprint scanning system). Complaints to the ICO about disproportionate collection of biometric data prompted the regulator to step in and review the scheme. Although the company that installed the FR system has stated that the biometric data will be held on the schools’ servers, it is worth noting that adopting this system introduces a new data breach risk and could make schools more of a cybersecurity target.

Meanwhile in the US, schools are being criticised for installing intrusive tracking software on school-issued laptops. Remote learning has meant more school children are using such devices.

Children – and adults – leave digital footprints as they navigate the online world, often without realising. Why is data literacy important to help improve children’s online safety?

The importance of data literacy cannot be overstated. Whenever we surf the web, engage on social media platforms or use apps on our phones, we leave digital footprints. And there really isn’t a way to fully prevent this.

It is really important to help children understand how their online interactions are tracked, how far such data might travel, who might have access to this data about them and how it might be used to make decisions that affect them.

It’s also important to help them understand what ‘levers’ are available to them so they can better shield themselves while online as well as how the rights they do have allow them to refuse to give up – or at the very least challenge demands for – personal data. This type of data literacy helps them develop agency and a sense of autonomy over their digital footprint. This is important as current trends mean this generation of young people will spend much of their lives navigating an increasingly data-centric world.

How can parents introduce these complex issues to their children, especially when, often, they don’t understand them either?

The pace of change can mean that parents – and not just children! – might struggle to fully understand what data protections they can expect or how expansive our digital footprints can be. As a result, they might feel ill-equipped to discuss these things with their children.

However, the truth is that no-one can be completely knowledgeable about these things. I’d encourage parents and guardians to approach the work of helping their children as a journey of exploration, one they can take alongside their children.

This involves asking their children questions such as: Which social platforms are you signed up to? Which educational resource platforms are you using? What are you posting online? The answers will help parents understand their child’s internet usage and put them in the best position to advise their kids on how to avoid sharing sensitive data online and reduce the risk of their data being misused.

What steps is Cloudera taking to raise awareness about this issue or improve its products to better protect children?

At Cloudera, our mission is to make data and analytics easy and accessible for everyone. To celebrate International Day of the Girl, we hosted a panel discussion on data literacy with experts in the field. Cloudera has also created and published a children’s book called ‘A Fresh Squeeze on Data’ in partnership with the education organisation ReadyAI.

The book aims to help children aged 8 to 12 understand how problems can be solved through the use of data. It covers concepts such as machine learning model training and data bias in a fun and engaging way. A free e-version of the book can be downloaded from FreshSqueezeKids.com, and other useful resources are also available on the site.

The under-representation of girls and women in STEM careers increases the risk that products which inadvertently discriminate against girls and women will be created. This is why the panel spent time discussing the risks of gender-related bias in machine learning applications. Data holds immense value, and we hope that discussing and understanding how it is used in everyday activities will spur further curiosity.

Where do you see society going in the next three to five years when it comes to data rights?

There will hopefully be better enforcement of existing regulations designed to protect people’s data rights and facilitate redress when things go wrong. GDPR, for example, was a massive game-changer for data protection. However, without adequate enforcement there is a risk that it becomes ineffective at deterring inappropriate data practices. As the public has become more aware of how organisations and institutions collect and use data about individuals, the appetite for using regulation like GDPR to seek redress is growing.

Alongside this, growing public awareness of the risks associated with inappropriately applied algorithmic decision-making means that members of the public are increasingly demanding explanations of when and how algorithms are being used to inform decisions that affect them. This is why algorithmic transparency has become more of a focus for policy makers.

Another topic that is being considered and discussed in greater depth is the auditing of algorithm-enabled applications. The researcher Deb Raji explores this brilliantly in a recently released paper. I think in the coming years smart organisations will be more open to commissioning and publishing these types of audits as a way of reassuring their customers as well as relevant regulators.

Is there anything else you’d like to add?

Yes, I’d encourage parents to look up the 5Rights Foundation, which was set up by Baroness Beeban Kidron to help protect the rights of children online. They have done some fantastic work in this area and are pushing for legislative and industry changes to ensure the digital world caters for children and young people.

Additionally, DefendDigitalMe, a civil society organisation, is doing amazing work in this area.