Over the last 20 years in digital design, I have seen the technological landscape change beyond recognition. The technologies that were taking shape in the early 2000s, from the internet to the smartphone to streaming, have gone through more iterations and phases than I could have imagined. But what interests me most are the decisions behind those iterations: what actually drives the innovation.

Good user experience (UX) is the typical response. As tools and technologies mature, they seek to improve consumer experiences. But when more than two-thirds of UK adults with visible or invisible disabilities feel excluded from products or services due to accessibility issues, our approach to UX is still far too narrow.

Differently abled people have had to be incredibly resourceful, using screen readers, automatic speech recognition (ASR), chatbots, and countless other extensions to navigate a landscape that wasn't built with them in mind. It's impressive, but it shouldn't be necessary.

With AI disrupting so much of what we know to be true, we are at an inflection point. Nothing is set in stone, and we have thousands of new, AI-powered possibilities in front of us. Everything is ripe for innovation.

Rather than simply tinkering with our current tech, we have the power to build new, more inclusive products tailored directly to the users who have missed out on so many of our creations in the past.

Time to reinvent the wheel

I'd like to propose accessibility as the new, urgent driving force for innovation. By consciously catering to marginalised communities and reimagining how to make products for more people, we allow ourselves to break out of the mould and create something new and exciting. It will enable us to reinvent experiences in meaningful ways.

Last year, Canon worked with the Royal National Institute of Blind People on an exhibition. Through sound, touch, and Braille, World Unseen showcased how those with sight loss experience art. In doing so, it created an immersive, unique, and educational experience designed for everyone to enjoy, including sighted attendees.

Technologies built to provide access can open doors for everyone. As subtitling software has grown and improved to deliver real-time, AI-powered access for deaf viewers, it has fed directly into better transcription for corporate meetings, and it lets us watch a funny reel even when we've left our earphones at home.

A growing opportunity

Technology's rapid development allows us to do more than simply build an access ramp so that disabled communities can join everyone else in the joys of digital creativity. By working closely with people with disabilities, we can build solutions that meaningfully improve their lived experience, then iterate on them so they mature alongside the underlying technologies.

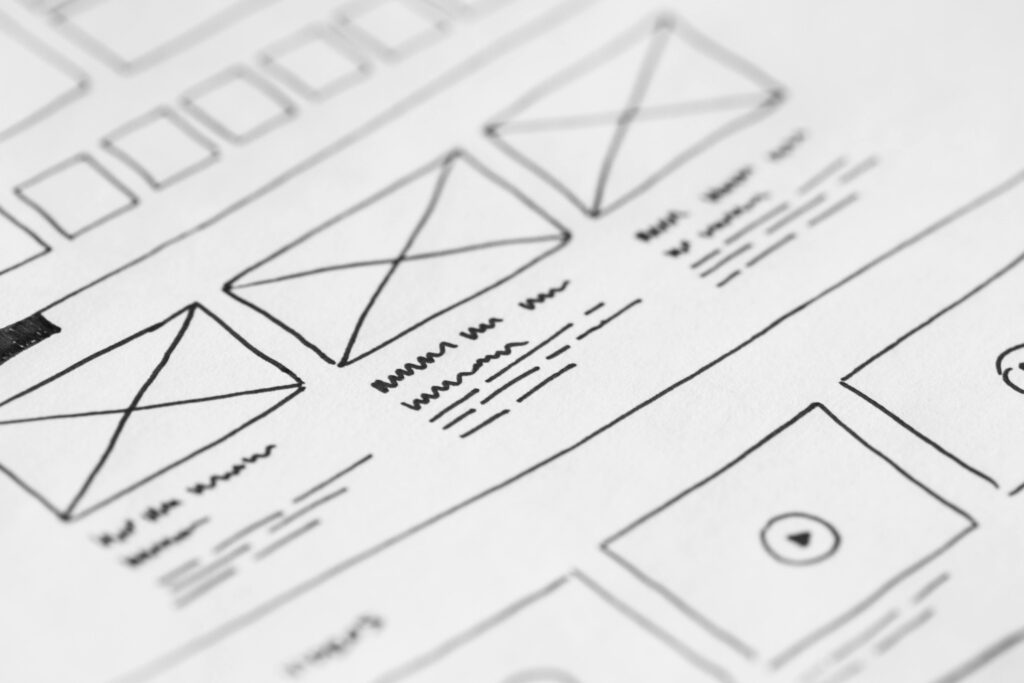

During the pandemic, my team and I started experimenting with hand-tracking technology, designing programs that would allow people to order from screens without needing to touch them. In the process, we found an entirely new application: one that could help the deaf and hard-of-hearing community, which is often underserved by society and technology.

Working with the American Society for Deaf Children, we built a platform, Fingerspelling.xyz, that used playful animations and hand-tracking to teach the alphabet in American Sign Language (ASL). It was a fascinating technical challenge and won several awards, but above all, it was a chance to contribute something genuinely useful. Crucially, the technology behind it kept maturing. With the help of NVIDIA, we applied our learnings to a much more complex challenge: Signs, a comprehensive ASL learning platform.

The challenge in building Signs was threefold. First, we needed a front end that could demonstrate signs to learners and give real-time feedback on their attempts. Second, we needed a dataset, supplied by a community of deaf and hearing sign language users and interpreted through AI. Lastly, we needed a movement that would inspire signers to contribute continually, so that the platform stays as dynamic and alive as the language itself.

User experience was critical to the first challenge. The cartoonish illustrations of Fingerspelling.xyz gave way to a sleek avatar that talks to the learner and uses colours and points to keep users engaged and motivated to learn more. Different people are drawn to different aspects of Signs; we all respond to things that look good and feel effortless. For me, though, it is the technology that still wows me. I make a sign to my laptop screen, and the avatar sees, mimics, corrects, or praises me. It feels like magic.

For this to work, we needed ASL users to understand how they could help. People want to make the world better; they just need to be empowered to do it and to learn how. Through GiveaHand.ai, we told the public, signers and non-signers alike, a story: that a few short images could feed into an expanding dataset and bring a language to life for thousands of new learners. As AI improves and more signers contribute, the platform's ability to recognise and teach increasingly complex signs grows with them.

The results have been incredible. The platform reached over 20 million people at launch, and in its first 14 days, Signs taught an impressive 50,000 signs.

It takes a village - and a vision

The intersection of technology and accessibility is among the most exciting frontiers for innovation today. Whether through AI-powered tools, immersive sensory experiences, or entirely new ways of engaging with digital content, we can build a world where inclusion is not an afterthought but a driving force.

Samsung's research estimates that brands ignoring accessibility miss out on spending power of over £274 billion annually, but they risk losing much more than this. After all, when we rethink accessibility, we discover new and better ways of doing things. It's good for society and good for business.

Anders Jessen

Anders Jessen is Founding Partner at Hello Monday/DEPT®